Security Can Be Simple

Given the stakes, ensuring the security of a software application can be a daunting task. Breaches of application perimeters and data stores are often dramatic and devastating. Besides the immediate consequences, such as data loss and the need for remediation, these incidents usually have a negative impact on trust between consumers and the business, and between the business and its technologists.

Security Challenges

Securing cloud native serverless applications can be particularly challenging for several reasons, including:

Managed services

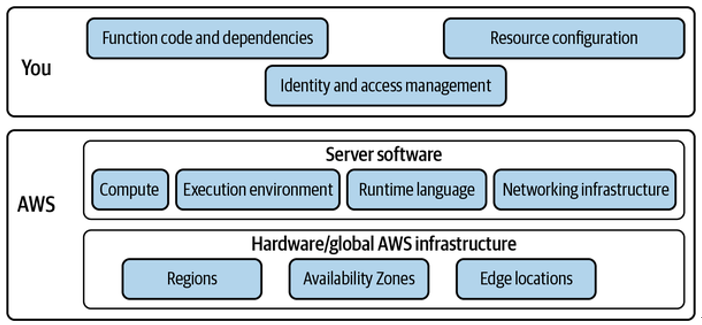

Throughout this book, you will see that managed services are core to serverless applications and, when applied correctly, can support clear separation of concerns, optimal performance, and effective observability. Managed services provide a solid foundation for your infrastructure, as well as several security benefits, primarily through the shared responsibility model (discussed later in this chapter). However, the sheer number of them available to teams building on AWS presents a problem: to use (or even evaluate) a managed service, you must first understand its features, pricing model, and, crucially, security implications. How do IAM permissions work for this service? How is the shared responsibility model applied to it? How will access control and encryption work?

Configurability

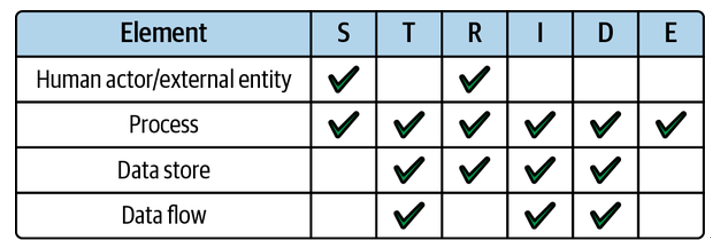

An aspect all managed services share is configurability. Every AWS service has an array of options that can be tweaked to optimize throughput, latency, resiliency, and cost. Combining services can yield further optimizations, such as placing SQS queues between Lambda functions to provide batching and buffering. Indeed, one of the primary benefits of serverless highlighted in this book is granularity: as you’ve seen, you can configure each of the managed services in your applications to a fine degree. In terms of security, however, every one of those settings is part of a vast surface area where flaws such as excessive permissions and privilege escalation paths can be introduced inadvertently.
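To make the risk of excessive permissions concrete, here is a minimal sketch in Python that scans an IAM policy document for wildcard grants. The function name `find_broad_statements`, both sample policies, the account ID, and the queue name are hypothetical and exist only for illustration. The check is a simple heuristic under those assumptions, not a substitute for a proper policy review.

```python
from typing import Any, List


def _as_list(value: Any) -> list:
    """IAM accepts either a single value or a list for Statement, Action, and Resource."""
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def find_broad_statements(policy: dict) -> List[str]:
    """Return a human-readable finding for each wildcard grant in Allow statements.

    Heuristic only: flags actions of the form "*" or "service:*" and
    resources equal to "*". Conditions and Deny statements are ignored.
    """
    findings = []
    for index, stmt in enumerate(_as_list(policy.get("Statement"))):
        if stmt.get("Effect") != "Allow":
            continue
        label = stmt.get("Sid", f"statement {index}")
        for action in _as_list(stmt.get("Action")):
            if action == "*" or action.endswith(":*"):
                findings.append(f"{label}: broad action {action!r}")
        for resource in _as_list(stmt.get("Resource")):
            if resource == "*":
                findings.append(f"{label}: wildcard resource")
    return findings


# Hypothetical policy: every SQS action on every resource.
broad_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "TooBroad", "Effect": "Allow", "Action": "sqs:*", "Resource": "*"},
    ],
}

# Hypothetical policy: one action on one named queue (made-up ARN).
scoped_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "SendOnly",
            "Effect": "Allow",
            "Action": ["sqs:SendMessage"],
            "Resource": "arn:aws:sqs:eu-west-1:123456789012:orders-queue",
        },
    ],
}

if __name__ == "__main__":
    for name, policy in [("broad", broad_policy), ("scoped", scoped_policy)]:
        print(name, find_broad_statements(policy))
```

The two policies differ by only a few characters, which is the point: at this level of granularity, a broad grant is as easy to write as a scoped one, so a check like this is best run automatically rather than left to manual review.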

Emergent standards

AWS delivers new services, new features, and improvements to existing features and services at a consistently high rate. These new services and features may relate directly to application or account security, or they may present new attack vectors to analyze and secure. There are always new levers to pull and more things to configure. The community around AWS and, in particular, serverless also moves at a relatively fast pace, with new blog posts, video tutorials, and conference talks appearing every day. The security aspect of software engineering perhaps moves slightly more slowly than other elements, but there is still a steady stream of advice from cybersecurity professionals, along with regular vulnerability disclosures and associated research. Keeping up with all the AWS product updates and the best practices for securing your ever-evolving application can easily become one of your biggest challenges.

While cloud native serverless applications present unique security challenges, there are also plenty of inherent benefits when it comes to securing this type of software. The architecture of serverless applications introduces a unique security framework and provides the potential to work in a novel way within this framework. You have a chance to redefine your relationship to application security. Security can be simple.

Next, let’s explore how to start securing your serverless application.