Securing REST APIs with Amazon Cognito

Many access management services and identity providers are available, including Okta and Auth0. We’ll focus on using Cognito to secure a REST API because it is native to AWS and therefore adds minimal integration overhead.

Amazon Cognito

Before we dive in, let’s define the building blocks you will need. Cognito is often viewed as one of the most complex AWS services. It is therefore important to have a foundational understanding of Cognito’s components and a clear idea of the access control architecture you are aiming for. Here are the key components for implementing access control using Cognito:

User pools

A user pool is a user directory in Amazon Cognito. Typically, you will have a single user pool in your application, and it will manage all of the application’s users, whether there is one or many.

Application clients

An application client (or app client) is a configuration within a user pool that represents an application permitted to authenticate against it. You may be building a traditional client/server web application where you maintain a frontend web application and a backend API. Or you may be operating a multitenant business-to-business platform, where tenant backend services use a client credentials grant to access your services. In the latter case, you can create an application client for each tenant and share the client ID and secret with the tenant backend service for machine-to-machine authentication.

Scopes

Scopes are used to control an application client’s access to specific resources in your application’s API.

Application-Based Multitenancy

Multitenancy is an identity and access management architecture that supports sharing the underlying resources of an application between multiple groups of users, or tenants. By contrast, in a single-tenant architecture each tenant is assigned a separate instance of the application running on distinct infrastructure. Although tenants share the same infrastructure in a multitenant architecture, each tenant’s data is kept completely separate and is never shared with other tenants.

Multitenancy is a common model in SaaS products and is used by cloud vendors themselves, including AWS. It also complements a zero trust architecture, where a more granular access control model is required. Consider a single consumer of your API: that consumer will likely have multiple services of its own, each consuming different API resources. In this scenario, a secure, zero trust approach is to isolate API usage per consuming service, granting each service only the minimum permissions it needs to make its requests.

Application-based multitenancy is a technique that will allow your application’s access control to scale, regardless of whether you start with a single user or multiple tenants. Each tenant of your application is assigned at least one application client and a set of scopes that determine the resources the app client can access. If you never scale beyond a single user, adopting this architecture will still serve you well. Take a look at the Cognito documentation for more information about implementing app client–based multitenancy.
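To make the tenant-to-client mapping concrete, here is a minimal sketch in Python. It models one app client per tenant service, each with only the scopes that service needs. The tenant names, the `orders-api/...` scopes, and the user pool ID are hypothetical. The dictionary keys are intended to mirror the parameters of the `create_user_pool_client` operation in boto3, but the sketch only builds the arguments and does not call AWS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantService:
    """One consuming service owned by a tenant (hypothetical model)."""
    tenant: str
    service: str
    scopes: tuple[str, ...]  # only the scopes this service needs


def app_client_params(user_pool_id: str, svc: TenantService) -> dict:
    """Build the arguments for one app client per tenant service.

    Assumption: the keys follow boto3's create_user_pool_client parameters.
    Nothing is sent to AWS here.
    """
    return {
        "UserPoolId": user_pool_id,
        "ClientName": f"{svc.tenant}-{svc.service}",
        "GenerateSecret": True,  # machine-to-machine clients need a secret
        "AllowedOAuthFlows": ["client_credentials"],
        "AllowedOAuthFlowsUserPoolClient": True,
        "AllowedOAuthScopes": list(svc.scopes),
    }


# Hypothetical tenant with two services and different permissions.
services = [
    TenantService("acme", "billing", ("orders-api/read",)),
    TenantService("acme", "fulfilment", ("orders-api/read", "orders-api/write")),
]
params = [app_client_params("us-east-1_EXAMPLE", s) for s in services]
```

Starting with one entry in `services` and adding more later leaves the model unchanged, which is why the approach scales from a single user to many tenants.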

Cognito and API Gateway

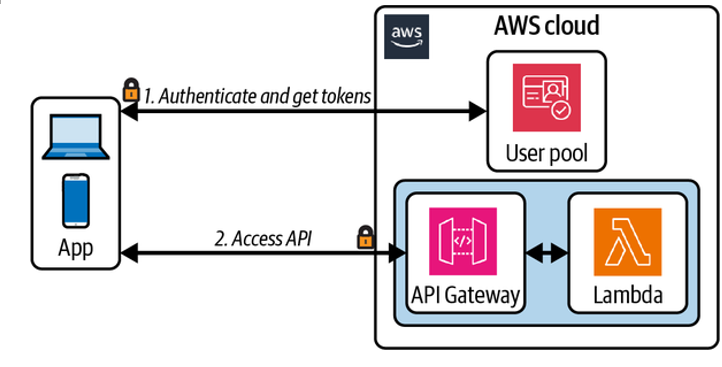

Cognito authorizers provide a fully managed access control integration with API Gateway, as illustrated in Figure 4-5. API consumers exchange their credentials (a client ID and secret) for access tokens via a Cognito endpoint. These access tokens are then included with API requests and validated via the Cognito authorizer.

Figure 4-5. API Gateway Cognito authorizer
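The credential exchange described above can be sketched with the Python standard library. This example assumes the user pool has a hosted domain exposing the OAuth 2.0 token endpoint at `/oauth2/token`. The domain, client ID, client secret, and scope are placeholders, and `build_token_request` is a hypothetical helper rather than part of any SDK. It constructs the request without sending it.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(domain, client_id, client_secret, scopes):
    """Build a client credentials token request for a Cognito domain.

    Assumption: `domain` is the user pool's hosted domain, for example
    <prefix>.auth.<region>.amazoncognito.com.
    """
    # The client ID and secret travel in an HTTP Basic Authorization header.
    credentials = f"{client_id}:{client_secret}".encode("utf-8")
    basic = base64.b64encode(credentials).decode("ascii")
    # Requested scopes are sent as a single space-separated string.
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "scope": " ".join(scopes),
    }).encode("ascii")
    return urllib.request.Request(
        url=f"https://{domain}/oauth2/token",
        data=body,
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


# Placeholder values only; replace with a real domain and credentials.
request = build_token_request(
    "example.auth.us-east-1.amazoncognito.com",
    "my-client-id",
    "my-client-secret",
    ["orders-api/read"],
)
```

Sending the request with `urllib.request.urlopen(request)` should return a JSON document containing an `access_token`, which the consumer then passes in the `Authorization` header of its API calls.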

Additionally, an API endpoint can be assigned a scope. When authorizing a request to the endpoint, the Cognito authorizer verifies that the endpoint’s scope is included in the scopes granted to the client’s access token.